20 June 2024

Posted by Paris Hsu – Product Manager, Android Studio

During the Developer Keynote at Google I/O 2024, we shared a live demo in which Gemini transformed a wireframe sketch of an app's UI into Jetpack Compose code, directly within Android Studio. While we're still refining this feature to make sure you get a great experience inside Android Studio, it's built on top of foundational Gemini capabilities which you can experiment with today in Google AI Studio.

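Specifically, we'll delve into three experiments: turning designs into Compose UI code, fixing UI issues from an annotated screenshot, and integrating Gemini prompts into your app.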

Note: Google AI Studio offers various general-purpose Gemini models, whereas Android Studio uses a custom version of Gemini which has been specifically optimized for developer tasks. While this means that these general-purpose models may not offer the same depth of Android knowledge as Gemini in Android Studio, they provide a fun and engaging playground to experiment and gain insight into the potential of AI in Android development.

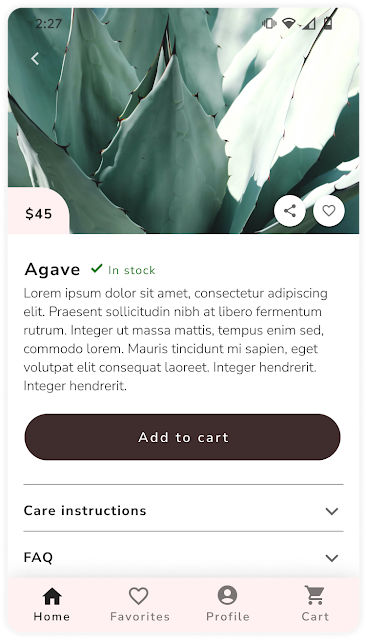

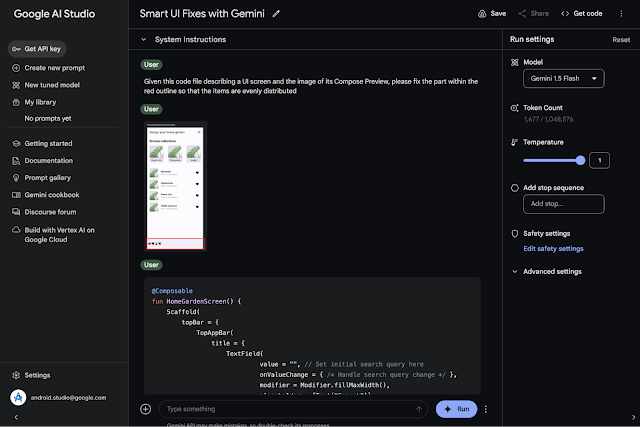

First, to turn designs into Compose UI code: Open the chat prompt section of Google AI Studio, upload an image of your app's UI screen (see example below) and enter the following prompt:

"Act as an Android app developer. For the image provided, use Jetpack Compose to build the screen so that the Compose Preview is as close to this image as possible. Also make sure to include imports and use Material3."

Then, click "run" to execute your query and see the generated code. You can copy the generated output directly into a new file in Android Studio.

With this experiment, Gemini was able to infer details from the image and generate corresponding code elements. For example, the original image of the plant detail screen featured a "Care Instructions" section with an expandable icon — Gemini's generated code included an expandable card specifically for plant care instructions, showcasing its contextual understanding and code generation capabilities.
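To give a feel for the kind of output to expect, here is a minimal hand-written sketch of an expandable "Care Instructions" card in Material3. It is not Gemini's actual output from the experiment; the composable name `CareInstructionsCard`, its parameters, and the sample text in the preview are assumptions made for illustration.

```kotlin
import androidx.compose.foundation.clickable
import androidx.compose.foundation.layout.Column
import androidx.compose.foundation.layout.Row
import androidx.compose.foundation.layout.fillMaxWidth
import androidx.compose.foundation.layout.padding
import androidx.compose.material.icons.Icons
import androidx.compose.material.icons.filled.KeyboardArrowDown
import androidx.compose.material.icons.filled.KeyboardArrowUp
import androidx.compose.material3.Card
import androidx.compose.material3.Icon
import androidx.compose.material3.MaterialTheme
import androidx.compose.material3.Text
import androidx.compose.runtime.Composable
import androidx.compose.runtime.getValue
import androidx.compose.runtime.mutableStateOf
import androidx.compose.runtime.saveable.rememberSaveable
import androidx.compose.runtime.setValue
import androidx.compose.ui.Alignment
import androidx.compose.ui.Modifier
import androidx.compose.ui.tooling.preview.Preview
import androidx.compose.ui.unit.dp

// Hypothetical composable: a card whose body is shown only while expanded.
@Composable
fun CareInstructionsCard(instructions: String, modifier: Modifier = Modifier) {
    // Survives recomposition and configuration changes such as rotation.
    var expanded by rememberSaveable { mutableStateOf(false) }

    Card(modifier = modifier.fillMaxWidth()) {
        Column(modifier = Modifier.padding(16.dp)) {
            // Header row: tapping anywhere on it toggles the expanded state.
            Row(
                modifier = Modifier
                    .fillMaxWidth()
                    .clickable { expanded = !expanded },
                verticalAlignment = Alignment.CenterVertically
            ) {
                Text(
                    text = "Care Instructions",
                    style = MaterialTheme.typography.titleMedium,
                    modifier = Modifier.weight(1f) // pushes the icon to the end
                )
                Icon(
                    imageVector = if (expanded) {
                        Icons.Filled.KeyboardArrowUp
                    } else {
                        Icons.Filled.KeyboardArrowDown
                    },
                    contentDescription = if (expanded) "Collapse" else "Expand"
                )
            }
            if (expanded) {
                Text(text = instructions, modifier = Modifier.padding(top = 8.dp))
            }
        }
    }
}

// Placeholder text, only here so the Compose Preview has something to render.
@Preview(showBackground = true)
@Composable
private fun CareInstructionsCardPreview() {
    MaterialTheme {
        CareInstructionsCard(instructions = "Water weekly and keep in indirect light.")
    }
}
```

Comparing generated code against a small reference like this is a quick way to check that imports resolve and that the Compose Preview matches the uploaded image.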

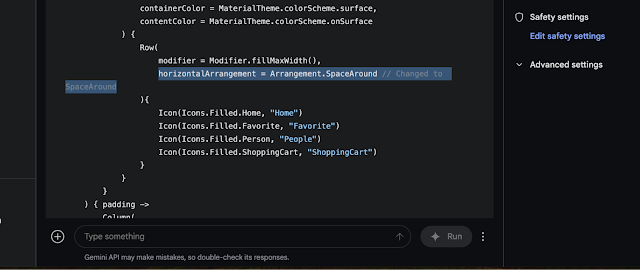

Inspired by "Circle to Search", another fun experiment you can try is to "circle" problem areas on a screenshot, along with relevant Compose code context, and ask Gemini to suggest appropriate code fixes.

You can explore this concept in Google AI Studio:

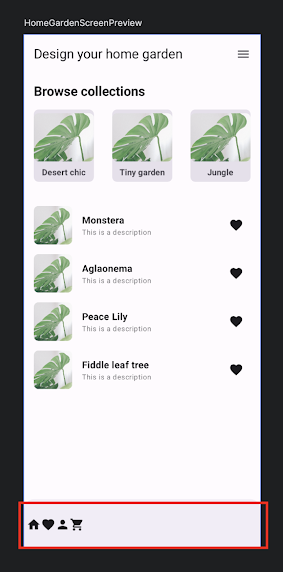

1. Upload Compose code and screenshot: Upload the Compose code file for a UI screen and a screenshot of its Compose Preview, with a red outline highlighting the issue—in this case, items in the Bottom Navigation Bar that should be evenly spaced.

2. Prompt Gemini: Open the chat prompt section and enter:

"Given this code file describing a UI screen and the image of its Compose Preview, please fix the part within the red outline so that the items are evenly distributed."

3. Gemini's solution: Gemini returned code that successfully resolved the UI issue.
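The post does not show the code Gemini returned, so the following is only a sketch of one common way to get evenly distributed items: Material3's `NavigationBar` gives each `NavigationBarItem` an equal share of the width. The composable name `EvenlySpacedBottomBar` and the three tabs are assumptions. For a hand-rolled `Row`, `Arrangement.SpaceEvenly` or `Modifier.weight(1f)` on each item are alternatives.

```kotlin
import androidx.compose.material.icons.Icons
import androidx.compose.material.icons.filled.Favorite
import androidx.compose.material.icons.filled.Home
import androidx.compose.material.icons.filled.Person
import androidx.compose.material3.Icon
import androidx.compose.material3.NavigationBar
import androidx.compose.material3.NavigationBarItem
import androidx.compose.material3.Text
import androidx.compose.runtime.Composable
import androidx.compose.runtime.getValue
import androidx.compose.runtime.mutableIntStateOf
import androidx.compose.runtime.remember
import androidx.compose.runtime.setValue
import androidx.compose.ui.tooling.preview.Preview

// Hypothetical bottom bar with three illustrative tabs.
@Composable
fun EvenlySpacedBottomBar() {
    val tabs = listOf(
        "Home" to Icons.Filled.Home,
        "Favorites" to Icons.Filled.Favorite,
        "Profile" to Icons.Filled.Person
    )
    var selectedIndex by remember { mutableIntStateOf(0) }

    // NavigationBar lays its items out in a Row; each NavigationBarItem
    // takes an equal weight, so spacing stays even for any item count.
    NavigationBar {
        tabs.forEachIndexed { index, (label, icon) ->
            NavigationBarItem(
                selected = index == selectedIndex,
                onClick = { selectedIndex = index },
                icon = { Icon(imageVector = icon, contentDescription = label) },
                label = { Text(label) }
            )
        }
    }
}

@Preview(showBackground = true)
@Composable
private fun EvenlySpacedBottomBarPreview() {
    EvenlySpacedBottomBar()
}
```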

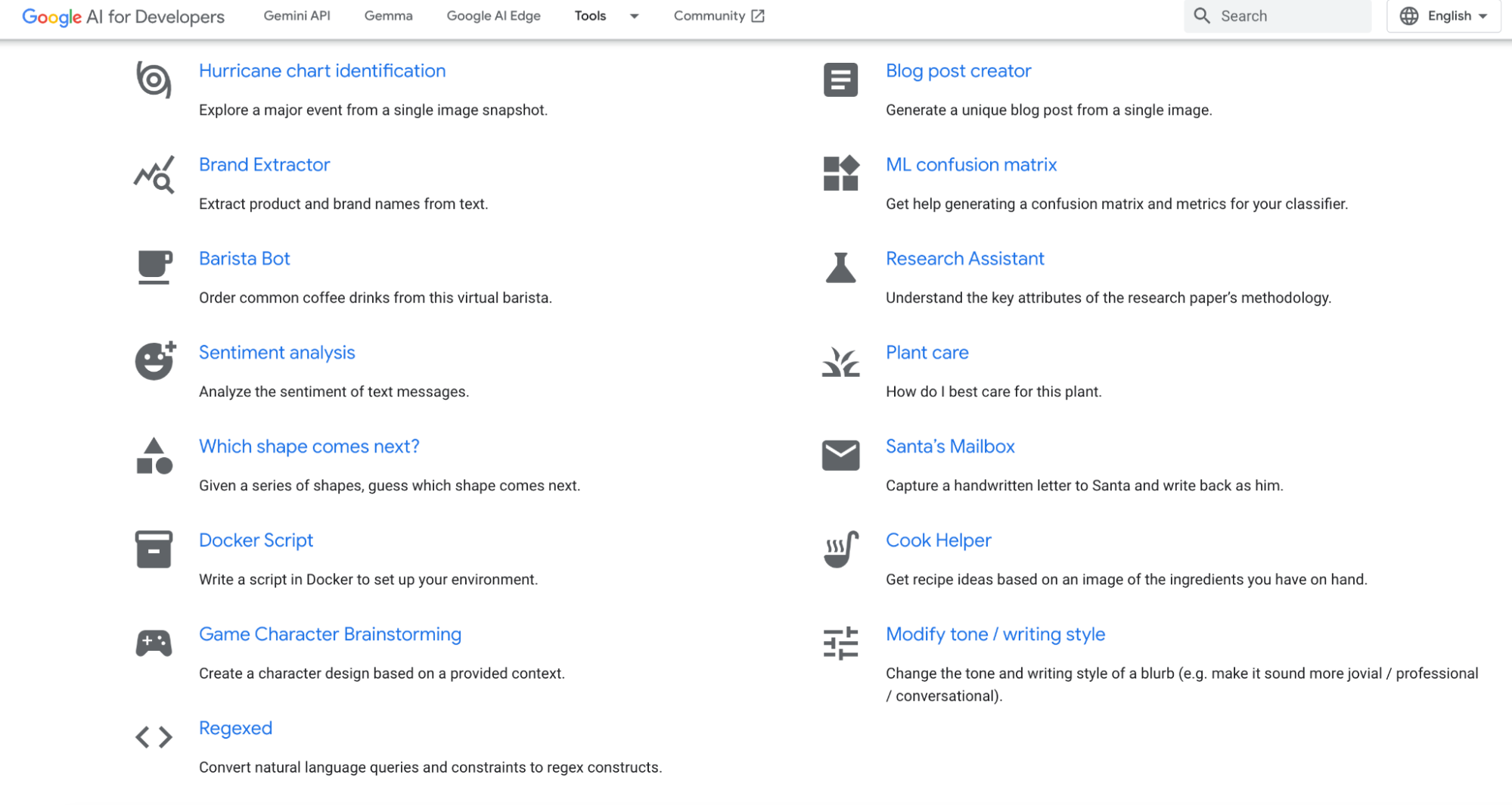

Gemini can streamline experimentation and development of custom app features. Imagine you want to build a feature that gives users recipe ideas based on an image of the ingredients they have on hand. In the past, this would have involved complex tasks like hosting an image recognition library, training your own ingredient-to-recipe model, and managing the infrastructure to support it all.

Now, with Gemini, you can achieve this with a simple, tailored prompt. Let's walk through how to add this "Cook Helper" feature into your Android app as an example:

1. Explore the Gemini prompt gallery: Discover example prompts or craft your own. We'll use the "Cook Helper" prompt.

2. Open and experiment in Google AI Studio: Test the prompt with different images, settings, and models to ensure the model responds as expected and the prompt aligns with your goals.

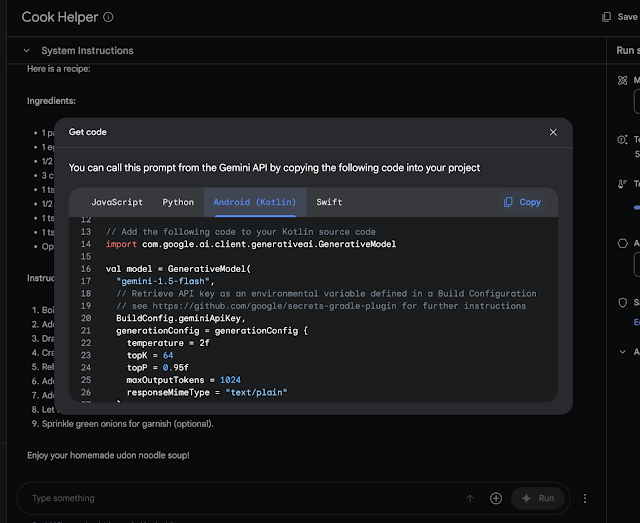

3. Generate the integration code: Once you're satisfied with the prompt's performance, click "Get code" and select "Android (Kotlin)". Copy the generated code snippet.

4. Integrate the Gemini API into your Android Studio project: Open your project in Android Studio. You can either use the new Gemini API app template provided within Android Studio or follow this tutorial. Paste the generated code into your project.

That's it - your app now has a functioning Cook Helper feature powered by Gemini. We encourage you to experiment with different example prompts or even create your own custom prompts to enhance your Android app with powerful Gemini features.
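The snippet that "Get code" produces depends on your prompt and settings, but integration code written against the Google AI client SDK for Android has roughly the following shape. This is a sketch under stated assumptions: the class name `CookHelper`, the model name, and the prompt wording are placeholders rather than the actual "Cook Helper" gallery prompt, and the API key should come from your own secure configuration.

```kotlin
import android.graphics.Bitmap
import com.google.ai.client.generativeai.GenerativeModel
import com.google.ai.client.generativeai.type.content

// Hypothetical wrapper around the Gemini API for the Cook Helper feature.
class CookHelper(apiKey: String) {

    private val model = GenerativeModel(
        modelName = "gemini-1.5-flash", // assumption: use the model you tested in AI Studio
        apiKey = apiKey
    )

    // Sends the photo plus a text instruction and returns the model's reply,
    // or null if the response contains no text.
    suspend fun suggestRecipe(ingredientsPhoto: Bitmap): String? {
        val prompt = content {
            image(ingredientsPhoto)
            // Placeholder wording, not the prompt from the gallery.
            text("Suggest a recipe that uses the ingredients shown in this photo.")
        }
        return model.generateContent(prompt).text
    }
}
```

Because `generateContent` is a suspending call, invoke `suggestRecipe` from a coroutine, for example inside `viewModelScope.launch { ... }`, and handle exceptions and null responses before showing the result to users.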

While these experiments are promising, it's important to remember that large language model (LLM) technology is still evolving, and we're learning along the way. LLMs can be non-deterministic, meaning they can sometimes produce unexpected results. That's why we're taking a cautious and thoughtful approach to integrating AI features into Android Studio.

Our philosophy towards AI in Android Studio is to augment the developer and ensure they remain "in the loop." In particular, when the AI is making suggestions or writing code, we want developers to be able to carefully audit the code before checking it into production. That's why, for example, the new Code Suggestions feature in Canary automatically brings up a diff view so developers can preview how Gemini proposes to modify their code, rather than blindly applying the changes directly.

We want to make sure these features, like Gemini in Android Studio itself, are thoroughly tested, reliable, and truly useful to developers before we bring them into the IDE.

We invite you to try these experiments and share your favorite prompts and examples with us using the #AndroidGeminiEra tag on X and LinkedIn as we continue to explore this exciting frontier together. Also, make sure to follow Android Developer on LinkedIn, Medium, YouTube, or X for more updates! AI has the potential to revolutionize the way we build Android apps, and we can't wait to see what we can create together.